Production Scrapers That Actually Deliver

A physics enthusiast who found his calling in solving seemingly impossible scraping challenges for D2C brands and lead-gen agencies

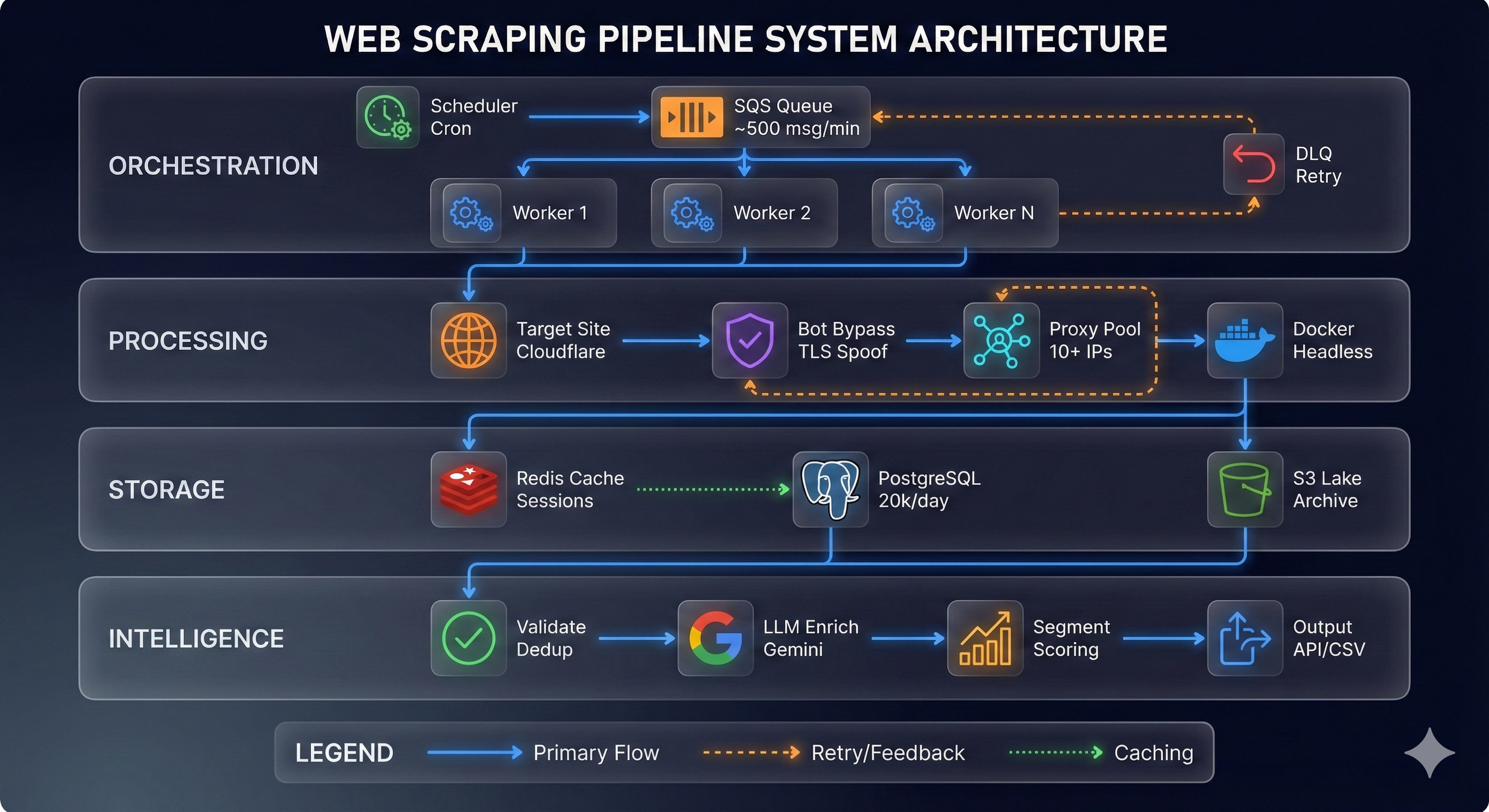

That kid obsessed with Einstein and Feynman? Now I'm building production scrapers that extract millions of records while bypassing the toughest anti-bot systems. 50+ production systems built. Co-founder @ CubikTech.